Summary: Retrieval-Augmented Generation (RAG) is getting a lot of well-deserved recognition for its ability to improve the accuracy of Generative AI, but not all RAG is created equal. Docugami’s KG-RAG (Knowledge Graph-RAG) outperforms other RAG approaches because it uses exclusive hierarchical semantic chunking to build a comprehensive XML Knowledge Graph from the full content of your organization’s unstructured documents, enabling far more accurate RAG. Docugami has worked with LangChain, LlamaIndex, and others to publish templates and other resources that make it easy for organizations to use Docugami KG-RAG in their business processes.

Generative AI makes it possible for users to generate content based on queries or prompts. The quality of the generated results can vary widely, and Generative AI that is trained on unreliable sources can create responses that are inaccurate, inappropriate, or fabricated (“hallucinations”).

One way to improve the quality of Generative AI results is Retrieval-Augmented Generation (RAG).

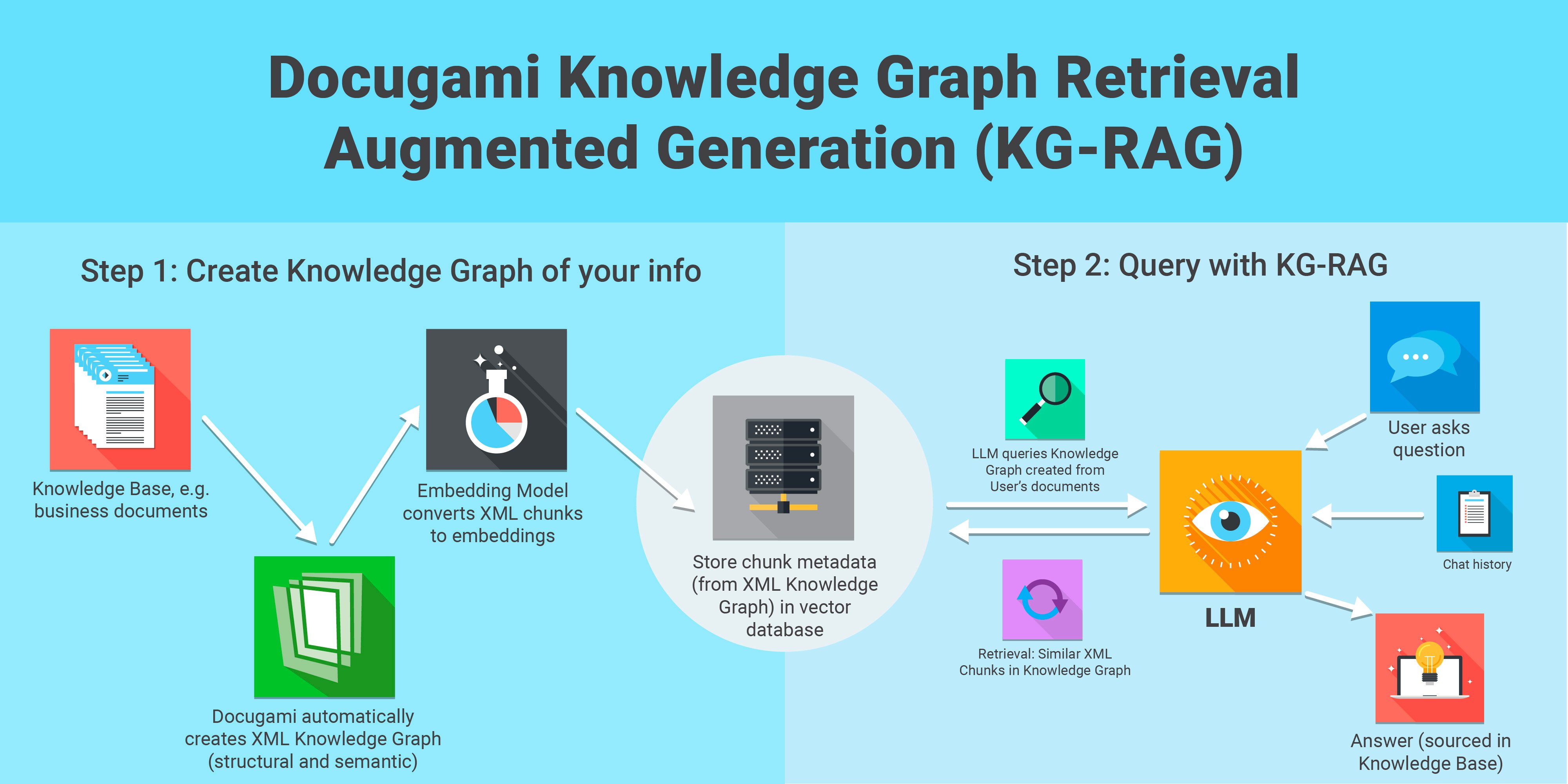

Retrieval-Augmented Generation describes a process in which a Generative AI system does not simply respond to a prompt based on its previous training. Instead, it goes out to a defined content source (such as a specific set of online information or a pre-defined set of documents or policies), queries that source for the requested information, and uses what it retrieves to generate its response.
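To make the retrieve-then-generate flow concrete, here is a minimal sketch in Python. It is an illustration only and not how Docugami or any particular product implements RAG: the `DOCUMENTS` content source, the word-overlap scoring, and the function names are all assumptions made up for this example. Real systems typically use embeddings and a vector index for retrieval, then send the resulting prompt to an LLM.

```python
import re
from collections import Counter

# Hypothetical content source: policy snippets invented for illustration.
DOCUMENTS = {
    "travel-policy": "Employees must book flights at least 14 days before travel.",
    "expense-policy": "Meal expenses are reimbursed up to 50 dollars per day.",
    "security-policy": "Laptops must use full disk encryption at all times.",
}

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def retrieve(query, documents, top_k=1):
    """Return the ids of the documents sharing the most words with the query."""
    query_words = Counter(tokenize(query))
    scored = []
    for doc_id, text in documents.items():
        shared = query_words & Counter(tokenize(text))
        scored.append((sum(shared.values()), doc_id))
    # Highest overlap first; ties are broken alphabetically by id.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]

def build_prompt(query, documents, top_k=1):
    """Assemble the prompt an LLM would receive: retrieved context plus the question."""
    doc_ids = retrieve(query, documents, top_k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

question = "How many days before travel must flights be booked?"
prompt = build_prompt(question, DOCUMENTS)
print(prompt)
```

The generation step is deliberately left out: the point is that the model answers from the retrieved context instead of relying only on its training data, so the quality of retrieval bounds the quality of the answer.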

Not all RAG systems are created equal

Retrieval-Augmented Generation can often provide greater accuracy, but it is only as good as its ability to deeply understand the content source it analyzes. In a business context, a company’s own documents and policies are a great example of a valuable content source for Retrieval-Augmented Generation. But because business documents are extremely complex, conventional AI systems provide only an imperfect understanding of a company’s documents, policies, and internal information.

In contrast to other Generative AI systems, Docugami’s highly specialized Large Language Models (LLMs) are specifically trained for business scenarios, and they are trained exclusively on millions of business documents. Docugami uses a unique combination of sophisticated chunking techniques to understand your business documents with far greater precision and insight than other AI systems. These techniques include visual chunking to decode structural elements like multiple columns, embedded tables, headers, and footers; semantic chunking for deep understanding of the content; and sophisticated language models to stitch together reading flow, among others. Docugami consistently outperforms other LLMs in understanding business documents; see “Docugami: Generative AI that Outperforms GPT-4 & Cohere for Business Documents.”

A complete Knowledge Graph of all your document information

Docugami creates a complete hierarchical XML Knowledge Graph of every piece of information in each of your business documents, and how each piece of information relates to every other piece of information. Creation of this richly detailed comprehensive Knowledge Graph of every document means that Docugami’s Knowledge Graph Retrieval-Augmented Generation (Docugami KG-RAG) is more accurate than RAG performed by other AI systems, even other AI systems trained on much larger data sets.

In a recent study, Docugami’s KG-RAG significantly outperformed the retrieval over documents built into OpenAI’s GPTs and Assistants API.

| | OpenAI Assistants | Docugami KG-RAG |
| --- | --- | --- |
| Answer Correctness - SEC 10-Q Dataset | 33% | 48% |

It is important to note that these are unassisted results. Docugami’s KG-RAG provides impressive accuracy without any user coaching or confirmation of results. Even more impressive, however, are the results with just a small amount of user interaction to confirm correct results and correct inaccurate results. Docugami is designed so business users can provide point-and-click feedback to the model. With just a few minutes of feedback, Docugami's results approach 100% accuracy even on complex business documents. This level of accuracy and precision means that business users can have confidence in the results generated by Docugami, and can use the results in a variety of ways including chat interrogation of large document sets, report generation, creating new documents, and using document data to drive mission-critical line-of-business processes.

Because Docugami creates a full hierarchical XML representation of every document in its entirety, Docugami can generate accurate results for many query scenarios, including (1) a single part of an individual document, (2) multiple parts of a single document, and (3) information from multiple documents.
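The three query scenarios can be sketched with Python's standard `xml.etree.ElementTree` module. This is a toy illustration under stated assumptions: the XML below, its tag names (`Corpus`, `Lease`, `Parties`, `Term`, and so on), and the helper functions are invented for this example and are not Docugami's actual output schema or API.

```python
import xml.etree.ElementTree as ET

# Hypothetical XML for two leases; the tag names are invented for
# illustration and are not Docugami's actual output schema.
CORPUS_XML = """
<Corpus>
  <Lease id="lease-a">
    <Parties><Landlord>Acme Holdings</Landlord><Tenant>Birch LLC</Tenant></Parties>
    <Term><StartDate>2023-01-01</StartDate><MonthlyRent>4000</MonthlyRent></Term>
  </Lease>
  <Lease id="lease-b">
    <Parties><Landlord>Acme Holdings</Landlord><Tenant>Cedar Inc</Tenant></Parties>
    <Term><StartDate>2024-06-01</StartDate><MonthlyRent>5500</MonthlyRent></Term>
  </Lease>
</Corpus>
"""

root = ET.fromstring(CORPUS_XML)

def single_part(root, doc_id, path):
    """Scenario 1: one part of one document."""
    node = root.find(f"./Lease[@id='{doc_id}']/{path}")
    return node.text if node is not None else None

def multiple_parts(root, doc_id, paths):
    """Scenario 2: several parts of the same document."""
    return {path: single_part(root, doc_id, path) for path in paths}

def across_documents(root, path):
    """Scenario 3: the same piece of information from every document."""
    return {
        lease.get("id"): lease.findtext(path)
        for lease in root.findall("./Lease")
    }

print(single_part(root, "lease-a", "Parties/Tenant"))
print(multiple_parts(root, "lease-b", ["Parties/Tenant", "Term/MonthlyRent"]))
print(across_documents(root, "Term/MonthlyRent"))
```

Because each value keeps its position in the hierarchy, a retrieved chunk such as `MonthlyRent` carries its context (which lease, which section) along with it, which is the kind of structure a flat text chunk loses.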

Gartner Analyst Calls Out Docugami’s Approach

Docugami’s unique approach has earned praise from Gartner, one of the top technology industry analyst firms. Gartner research vice president Arup Roy recently singled Docugami out as a solution using finely-honed LLMs to generate breakthrough results for customers while avoiding many of the security, privacy, and AI “hallucination” issues that can plague other AI vendors.

“Fine-tuned domain-trained (vertical-specific) LLMs enable… addressing a wide variety of challenges and concerns about using generic LLMs (e.g., hallucinations, the unpredictability of outputs, and security/privacy concerns). An example is Docugami — an IDP vendor using its own family of LLMs trained on millions of business documents ranging from 2.7 billion parameters to 20 billion parameters, with multimodal inputs in the vision and text domains. Docugami addresses a variety of vertical industry segments, including commercial insurance, commercial real estate, technology and professional services.”

- from Gartner report “Intelligent Document Processing Growth Strategies for Tech CEOs,” by Arup Roy, Research Vice President, January 4, 2024

Put KG-RAG to work for your business with LangChain or LlamaIndex

Docugami has worked with LangChain, LlamaIndex, and others to publish templates, webinars, and other resources that let customers send the XML knowledge graphs Docugami generates from their own documents as input to LLMs.

Here are a few of the tools and resources to help you implement Docugami KG-RAG:

- Introducing the Docugami KG-RAG Template for LangChain: Better Results than OpenAI Assistants

- LangChain + Docugami Webinar: Lessons from Deploying LLMs with LangSmith

- Turning Documents into Data: Docugami-LlamaIndex Webinar on Using Metadata for Greater Accuracy and Precision

As part of our contribution to the AI community, we have also published Docugami KG-RAG Datasets, an MIT-licensed repository of documents and annotated question-answer pairs that reflect real-life customer usage. These datasets allow for more accurate assessment of various RAG approaches because they provide realistic long-form documents similar to business customer documents, rather than standard academic documents, along with questions of varying difficulty across single and multiple documents. You can learn more about the Docugami KG-RAG Datasets here.

We are excited about the potential for Knowledge Graph Retrieval-Augmented Generation (KG-RAG) to deliver deep business value for companies. Let us show you how.